Compute power is not always used to its full potential. We want to use modern software technologies to improve system resource utilization and the performance of running workloads. Our work consisted of developing a cloud platform that achieves this through container live migration. It covers research on container migration techniques, real-time system monitoring, and resource provisioning policies. We developed tools to handle these tasks, analyzed and optimized our toolset, and evaluated workloads using machine learning to develop compute resource provisioning policies. We then methodically evaluated the performance of our entire platform and developed resource provisioning policies. We tested our system on two commodity servers running multiple virtual machines to simulate a cloud infrastructure. On this virtualized infrastructure, we first tested our platform with a small number of concurrent container workloads and then tested system scaling to thousands of container workloads.

This thesis is organized around the three main components of our container live migration and resource provisioning platform: container live migration, resource monitoring, and resource provisioning. The first chapter introduces the problem, terminology, and solution. The second chapter reviews the research literature related to the topics covered by our work. The third chapter discusses our container live migration toolset, migration techniques, and optimizations. The fourth chapter discusses our real-time resource monitoring tool and an analysis of its performance. Chapter five explores how we analyzed container workloads using machine learning to develop our container placement policies. Chapter five also discusses the testing of our live migration container placement policies and their performance. The last chapter provides a brief conclusion covering our work, findings, and future work.

Containers

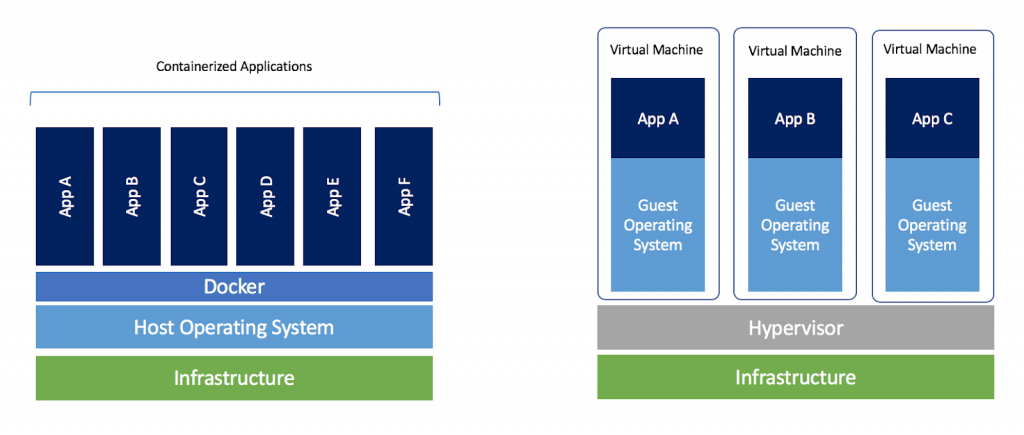

Containers rely on an operating system feature in which the kernel allows multiple isolated user-space instances to exist [1]. Docker defines a container as “a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another” [2]. Compared to virtual machines, containers are a lighter-weight, more agile way of handling virtualization. Rather than spinning up an entire virtual machine with its hypervisor, kernel, and system services, a container packages together everything needed to run a piece of software and shares the host’s kernel. Containers provide the flexibility to handle many software environments and tasks [3].

Figure 1‑1 Containers vs Virtual Machines

Live Migration and Application Checkpointing

Application checkpointing consists of saving a snapshot of the application’s state so that it can restart from that point. Checkpoint/Restore in Userspace (CRIU) is a software tool for the Linux operating system that can freeze a running application (or part of it) and checkpoint it as a collection of files on disk [4]. These files can then be used to restore the application and run it exactly as it was at the time of the freeze. Using a tool like CRIU for application checkpointing, together with a container manager such as Docker, allows a developer to migrate a running container to another host.
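The checkpoint, transfer, and restore sequence described above can be sketched as a list of shell commands. This is a minimal illustration and not the toolset developed in this thesis: it assumes Docker's experimental `docker checkpoint create` command and the `--checkpoint` and `--checkpoint-dir` options of `docker start`, whose support varies between Docker versions. It also assumes that `rsync` is available and that a container with the same name and image has already been created on the destination host. The helper names, container name, and paths are hypothetical.

```python
import shlex


def checkpoint_cmd(container, checkpoint, checkpoint_dir, leave_running=False):
    """Source host: ask Docker (through CRIU) to dump the container state to disk."""
    cmd = ["docker", "checkpoint", "create", "--checkpoint-dir", checkpoint_dir]
    if leave_running:
        # Keep the container running after the dump instead of stopping it.
        cmd.append("--leave-running")
    return cmd + [container, checkpoint]


def transfer_cmd(checkpoint_dir, dest_host):
    """Copy the checkpoint files to the same path on the destination host."""
    src = checkpoint_dir.rstrip("/") + "/"
    return ["rsync", "-a", src, f"{dest_host}:{src}"]


def restore_cmd(container, checkpoint, checkpoint_dir):
    """Destination host: start an already created container from the checkpoint."""
    return ["docker", "start", "--checkpoint", checkpoint,
            "--checkpoint-dir", checkpoint_dir, container]


def migration_plan(container, checkpoint, checkpoint_dir, dest_host):
    """Return the three steps in order; the last one runs on the destination."""
    return [
        checkpoint_cmd(container, checkpoint, checkpoint_dir),
        transfer_cmd(checkpoint_dir, dest_host),
        restore_cmd(container, checkpoint, checkpoint_dir),
    ]


if __name__ == "__main__":
    # Hypothetical container "web" migrated to a hypothetical host "node2".
    for step in migration_plan("web", "cp1", "/tmp/checkpoints", "node2"):
        print(shlex.join(step))
```

The functions only build the commands; running them is left to the caller so that each step can be timed or logged separately.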

Resource Provisioning

Resource provisioning describes the process of assigning appropriate resources to compute workloads. It requires pairing each workload with the compute resource that best matches its requirements. This is a complex job that must be optimized to make the best use of the available system resources. We monitor system resource utilization metrics in real time to identify system bottlenecks. We use container live migration to improve system performance by evaluating the resources available on the infrastructure and moving a container to where it is better accommodated. Resource provisioning evaluation and container migration decisions are determined by our resource provisioning policies. We developed those policies after performing system and container workload characterization experiments.
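To make the idea of a provisioning policy concrete, the following is a minimal sketch of a threshold-based rule. It is not the policy developed in this thesis: the `HostMetrics` fields, the 0.85 threshold, and the choice of the least-loaded host as destination are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class HostMetrics:
    name: str
    cpu: float  # CPU utilization as a fraction between 0 and 1
    mem: float  # memory utilization as a fraction between 0 and 1


def bottleneck(host, threshold=0.85):
    """Return the most saturated resource if it exceeds the threshold, else None."""
    usage = {"cpu": host.cpu, "mem": host.mem}
    resource, value = max(usage.items(), key=lambda kv: kv[1])
    return resource if value > threshold else None


def pick_destination(source, hosts, demand_cpu, demand_mem, threshold=0.85):
    """Pick the host that stays least loaded after receiving the container.

    Hosts that would exceed the threshold on CPU or memory are excluded.
    Returns None when no host can take the container.
    """
    candidates = [
        h for h in hosts
        if h.name != source.name
        and h.cpu + demand_cpu <= threshold
        and h.mem + demand_mem <= threshold
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda h: max(h.cpu + demand_cpu, h.mem + demand_mem))


if __name__ == "__main__":
    hosts = [HostMetrics("a", 0.95, 0.40),
             HostMetrics("b", 0.50, 0.50),
             HostMetrics("c", 0.20, 0.30)]
    if bottleneck(hosts[0]) is not None:
        dest = pick_destination(hosts[0], hosts, demand_cpu=0.30, demand_mem=0.10)
        print(dest.name if dest else "no suitable host")
```

A fixed threshold is the simplest possible rule; a policy derived from workload characterization would replace both the threshold and the scoring function.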

Machine Learning

Machine learning algorithms build a mathematical model from sample data in order to make predictions or decisions without being explicitly programmed to perform the task. We used machine learning to analyze system resource utilization. It allows us to select the most important features of a given workload so that our resource provisioning policies can focus on those system metrics. With machine learning we can also forecast the performance of a container’s workload on another compute host to improve our container live migration policy.
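As an illustration of feature selection on resource metrics, the sketch below ranks metrics by the absolute Pearson correlation between each metric and a performance measure. This section does not name the algorithm used in this work, so the correlation ranking is only a simple stand-in, and the metric names and sample values are made up for the example.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 when either series is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        return 0.0
    return cov / (spread_x * spread_y)


def rank_features(samples, target):
    """Rank metrics by how strongly they correlate with the target.

    samples maps a metric name to its list of observations; target holds the
    performance measure observed at the same time steps.
    """
    scores = {name: abs(pearson(values, target)) for name, values in samples.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    # Made-up observations of three metrics and a workload runtime.
    samples = {"cpu": [1, 2, 3, 4], "mem": [2, 2, 2, 2], "net": [4, 1, 3, 2]}
    runtime = [10, 20, 30, 40]
    for name, score in rank_features(samples, runtime):
        print(f"{name}: {score:.2f}")
```

Metrics at the top of the ranking are the ones a provisioning policy would monitor most closely; a constant metric such as `mem` in this example carries no information and scores zero.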

Problem Statement

Cloud computing has evolved from using virtual machines to using containers. Most cloud provisioning services and software-as-a-service (SaaS) providers use containers for their “serverless” services. Current software stacks for managing containers allow automatic resource allocation by selecting hosts in advance and keeping them in hot standby. One example is Kubernetes’ horizontal autoscaler, which uses user-defined metrics to allocate containers on a set of available hosts using a statistical algorithm [5].
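For reference, the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler [5] computes the desired replica count as the current count multiplied by the ratio of the observed metric to its target, rounded up, and skips scaling when that ratio is close to 1. The sketch below reproduces only that rule; the function name is hypothetical, and the 0.1 default tolerance is taken from the Kubernetes documentation rather than from this work.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric, tolerance=0.1):
    """Replica count suggested by the documented HPA rule.

    desired = ceil(current_replicas * current_metric / target_metric),
    unless the ratio is within the tolerance of 1.0, in which case the
    replica count is left unchanged.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


if __name__ == "__main__":
    # Four replicas at twice the target load: the rule suggests doubling.
    print(desired_replicas(4, current_metric=200, target_metric=100))
```

This rule changes how many replicas run, but it does not move a running container from one host to another.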

Current software stacks for container management do not use live migration for performance tuning. Workload managers such as Slurm use application checkpointing for failure migration but not for resource provisioning or resource balancing [6].

In this work we present the development of our container live migration platform, which establishes an ideal container placement configuration. Through live migration, it manages compute resources for the currently running containers based on the available resources so that they are used as efficiently as possible.

We conducted workload characterization to understand container resource utilization and to design a container placement policy for live migrations. We used machine learning for resource feature selection and workload forecasting, and to develop a methodology for estimating container performance gains with live migration.

This research contributes a container live migration toolset for Docker. Our toolset optimizes container live migration and adds support for network-connected containers. We also developed a container state transfer scheme that migrates the container’s filesystem in an optimal manner. We present a performance analysis of our live migration techniques. We also contribute a lightweight container monitoring tool that supports live-migrating containers.

For this research, we used a set of commodity machines, servers, and IoT devices. Multiple machines act as container hosts and run the live migration platform. A central machine runs the monitoring software, the data processing, and the provisioning model that makes the resource allocation decisions.

Container Live Migration System

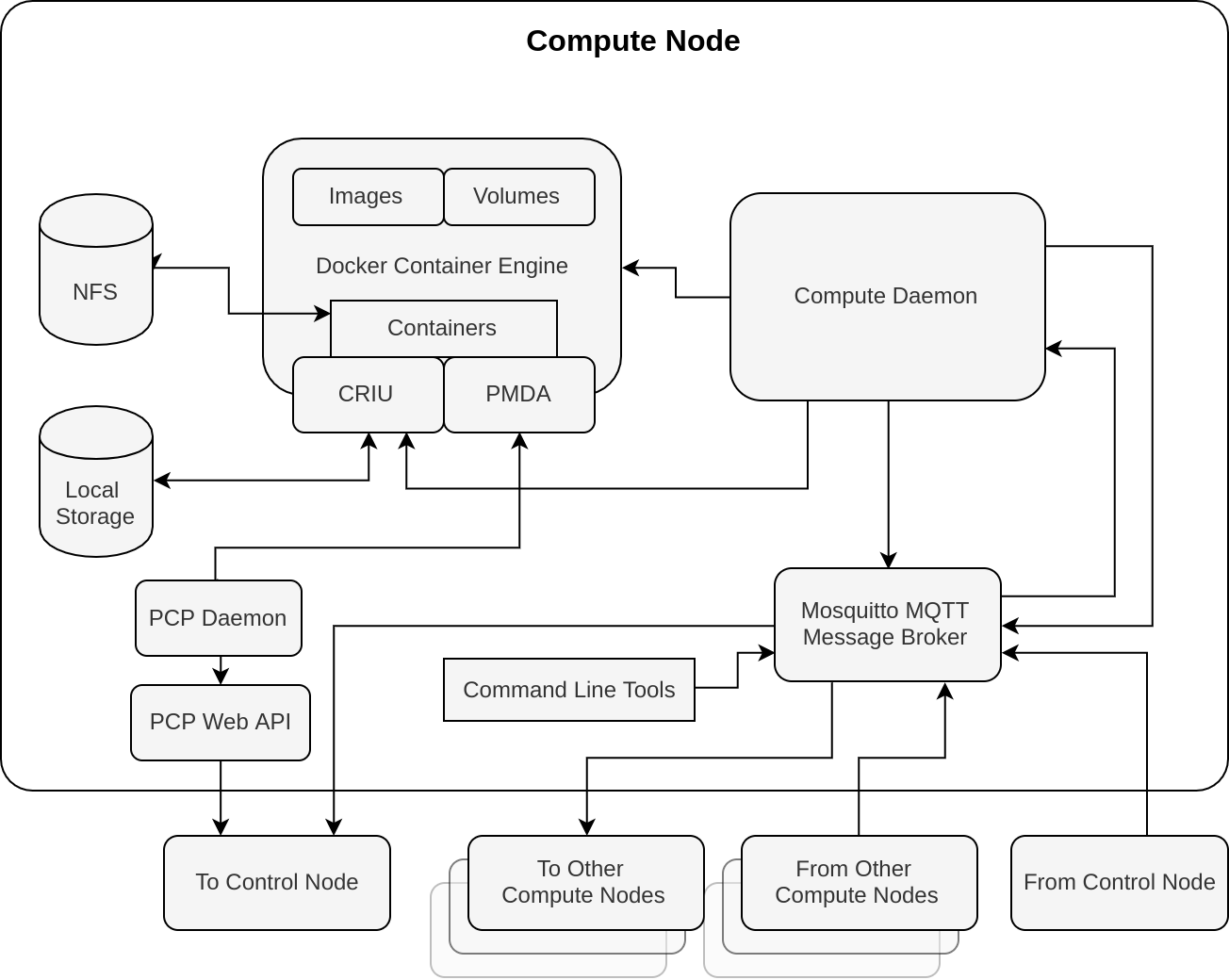

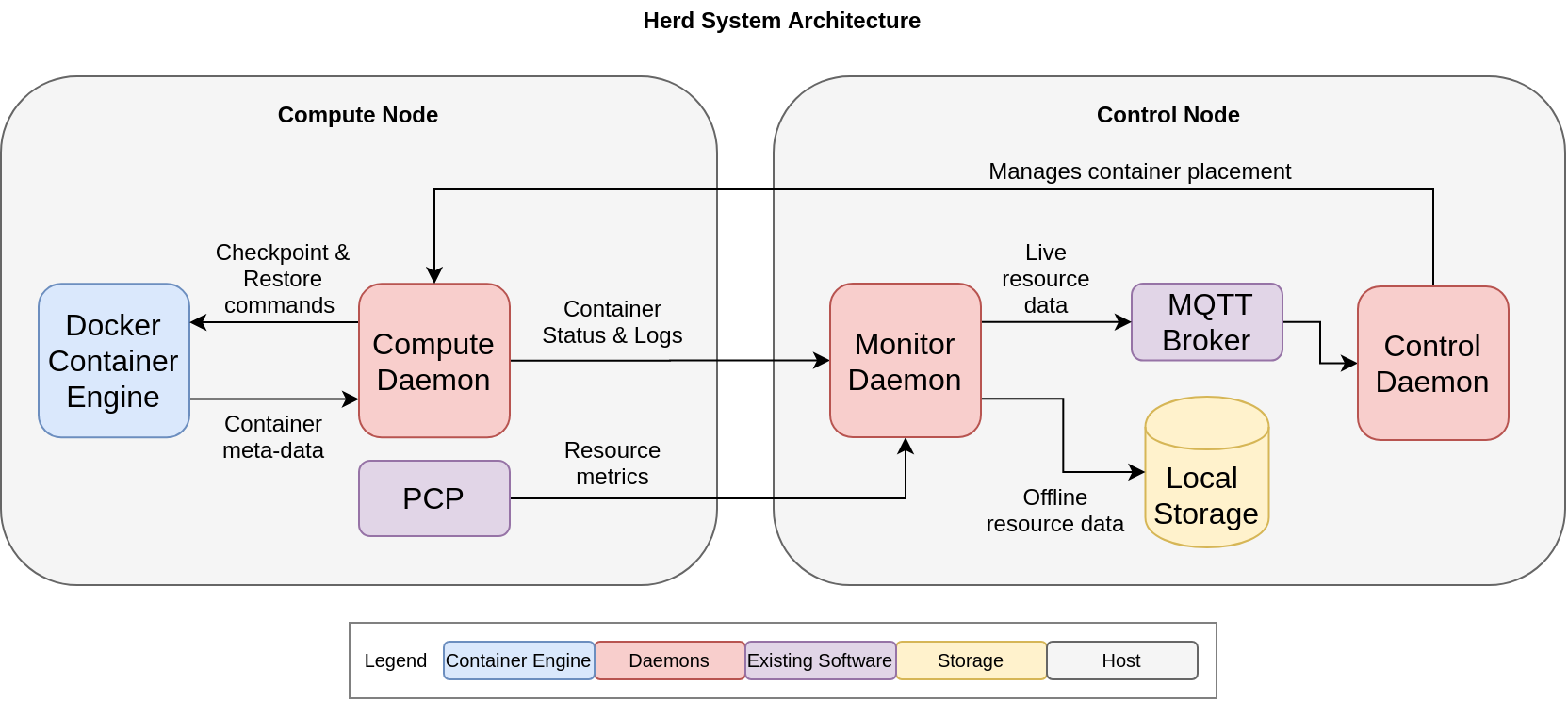

Our live migration toolset defines Compute nodes and a Control node that together manage and run containers. The Compute nodes host the containers and are responsible for orchestrating the container live migration tasks, as well as receiving communication from the Control node. Each Compute node sends status and monitoring information to the Control node.

Figure 1‑2 Compute Node

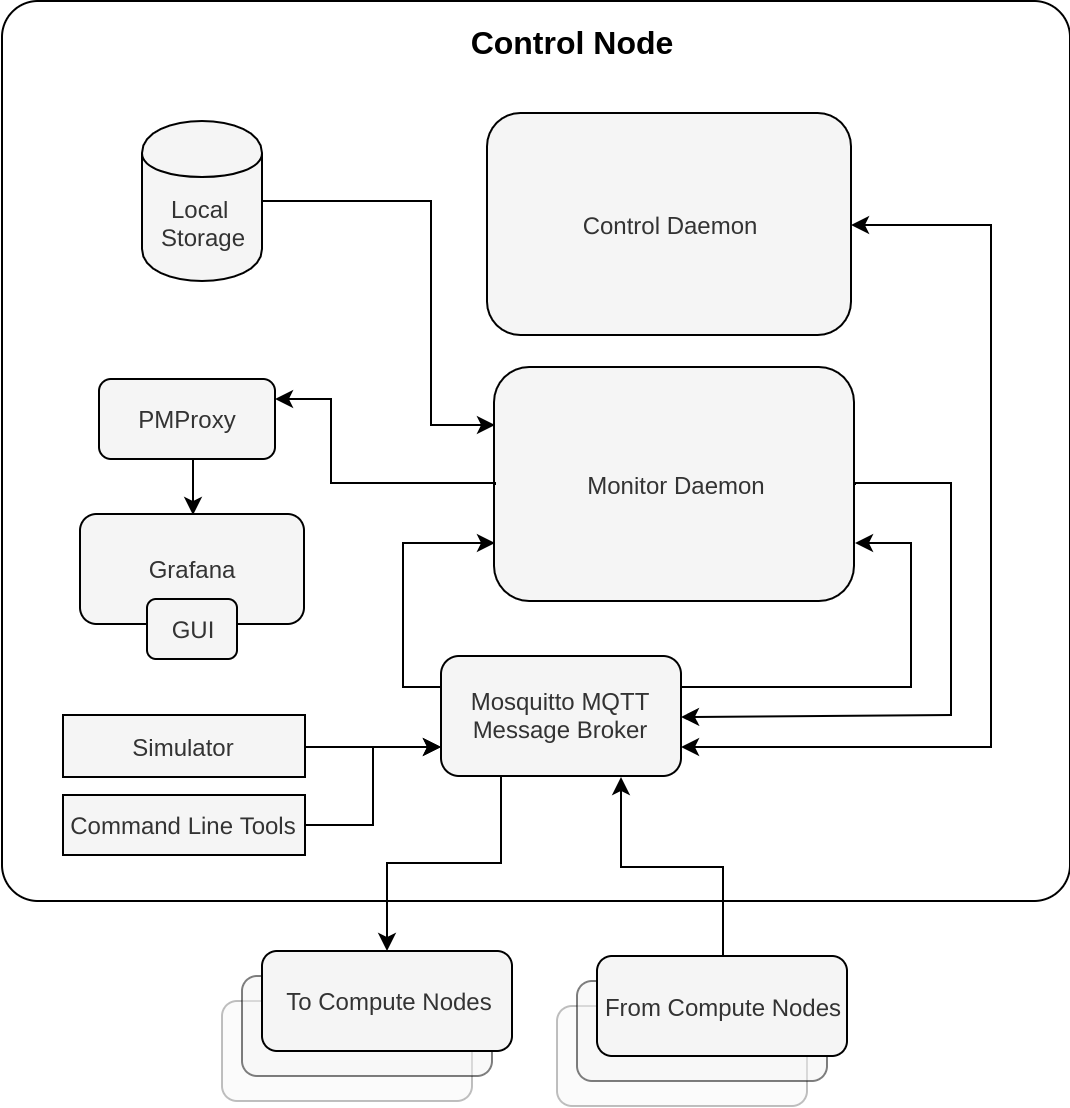

The Control node is responsible for monitoring the performance of the containers and Compute nodes by processing real-time monitoring information. It determines the ideal container placement configuration based on current and past resource metrics. The Control node sends container migration requests to the Compute nodes.

Figure 1‑3 Control Node

All messaging is done through message brokers on the Compute and Control nodes. The Compute nodes manage container image caching and container filesystem transfers to increase performance during the live migration process. The toolset also includes a set of command-line programs that run container live migration from one Compute host to another. We used these tools to test our platform components and to perform system characterization and resource provisioning experiments. We named our platform Herd, as it manages a herd of containers (Figure 1‑4).

Figure 1‑4 Herd System Architecture
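The two kinds of messages exchanged between the nodes, status reports from Compute nodes and migration requests from the Control node, can be sketched as JSON payloads. The field names, the `type` and `body` envelope, and the use of JSON are assumptions made for illustration; this section does not specify Herd's actual message format or broker.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class StatusReport:
    """Sent by a Compute node to the Control node (hypothetical fields)."""
    node: str
    container: str
    cpu: float
    mem: float


@dataclass
class MigrationRequest:
    """Sent by the Control node to a Compute node (hypothetical fields)."""
    container: str
    source: str
    destination: str


MESSAGE_TYPES = {"status": StatusReport, "migrate": MigrationRequest}


def encode(kind, message):
    """Wrap a message in an envelope that names its type, then serialize it."""
    return json.dumps({"type": kind, "body": asdict(message)})


def decode(payload):
    """Rebuild the dataclass named by the envelope's type field."""
    document = json.loads(payload)
    return MESSAGE_TYPES[document["type"]](**document["body"])


if __name__ == "__main__":
    request = MigrationRequest(container="web", source="node1", destination="node2")
    wire = encode("migrate", request)
    print(wire)
    print(decode(wire))
```

Tagging each payload with its type lets a single broker queue carry both message kinds while the receiver still reconstructs typed objects.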

References

[1] S. Hogg, “Software Containers: Used More Frequently than Most Realize | Network World,” Network World, 2014. https://www.networkworld.com/article/2226996/software-containers–used-more-frequently-than-most-realize.html (accessed Oct. 28, 2020).

[2] Docker Team, “What is a Container? | App Containerization | Docker,” Docker. https://www.docker.com/resources/what-container (accessed Oct. 28, 2020).

[3] IBM Cloud Team, “Containers vs. VMs: What’s the Difference? | IBM,” IBM, Sep. 02, 2020. https://www.ibm.com/cloud/blog/containers-vs-vms (accessed Oct. 28, 2020).

[4] “CRIU,” criu.org, Apr. 29, 2020. https://criu.org/Main_Page (accessed Oct. 28, 2020).

[5] “Horizontal Pod Autoscaler | Kubernetes,” Kubernetes. https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#algorithm-details (accessed Oct. 28, 2020).

[6] “Slurm Workload Manager,” Slurm, Nov. 24, 2013. https://slurm.schedmd.com/slurm.html (accessed Oct. 28, 202